Initial Survey of VANET Literature

Nathan Balon

A review of papers on vehicular ad hoc networks, identifying the emphasis of each paper:

- What problem is addressed?

- What solution is proposed?

- How do the solutions differ from previous solutions?

- What are the main contributions and conclusions?

The Broadcast Storm Problem in a Mobile Ad Hoc Network, by S-Y. Ni, Y-C. Tseng, Y-S. Chen, and J-P. Sheu

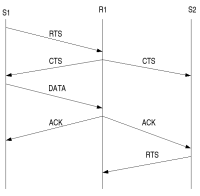

- The problem addressed is the use of flooding to propagate a broadcast message throughout a network. The “broadcast storm problem” refers to the problems associated with flooding. First, flooding results in a large number of duplicate packets being sent in the network. Second, a high amount of contention takes place, because nodes in close proximity to each other will try to rebroadcast the message. Third, collisions are likely to occur because the RTS/CTS handshake is not applicable to broadcast messages.

- There are two ways to limit the problems caused by a broadcast storm. First, an approach can be taken that reduces the possibility of redundant rebroadcasts. Second, the timing of rebroadcasts can be differentiated. The paper proposes five schemes: probabilistic, counter-based, distance-based, location-based, and cluster-based.

- Most other approaches try to solve the problem by assigning time slots for the transmission of a broadcast message. The problem with assigning time slots is that global synchronization is difficult to achieve in ad hoc networks.

- The simulations showed that a simple counter-based implementation can eliminate a large number of redundant rebroadcasts in a dense network. The location-based scheme was found to be the best solution, and the results showed that it worked well for a wide range of host distributions. The only drawback of the location-based scheme is that a positioning device such as a GPS receiver is needed.
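The counter-based scheme lends itself to a short illustration. The sketch below is a toy model, not the authors' implementation: a node counts how many copies of a message it overhears while waiting, and cancels its own rebroadcast once the count reaches a threshold. The class name, method names, and the default threshold of 3 are assumptions made for this example.

```python
class CounterBasedNode:
    """Toy model of counter-based rebroadcast suppression (all names are hypothetical)."""

    def __init__(self, counter_threshold=3):
        # Assumed threshold: suppress the rebroadcast once this many copies were heard.
        self.counter_threshold = counter_threshold
        self.copies_heard = {}  # message id -> number of copies heard so far

    def on_receive(self, msg_id):
        """Record one more copy of msg_id; return True if this is the first copy."""
        first = msg_id not in self.copies_heard
        self.copies_heard[msg_id] = self.copies_heard.get(msg_id, 0) + 1
        return first

    def on_timer_expired(self, msg_id):
        """Called when the random waiting period ends: rebroadcast only if few copies were heard."""
        return self.copies_heard.get(msg_id, 0) < self.counter_threshold
```

In a dense network many neighbors repeat the same message, so the counter reaches the threshold quickly and most nodes stay silent, which is the effect the simulations reported.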

Urban Multi-Hop Broadcast Protocol for Inter-Vehicle Communication Systems, by G. Korkmaz, E. Ekici, F. Ozguner, and U. Ozguner

- The problem addressed in the paper is the multi-hop broadcast of information in an Inter-Vehicle Communication (IVC) system. The methodology addresses the hidden node problem, the broadcast storm problem, and the reliability of multi-hop broadcasting.

- The authors suggest creating an extension to IEEE 802.11. The problem is addressed by locating the furthest node in a direction without prior topology information. The furthest node is then responsible for forwarding the message and acknowledging the successful broadcast. The protocol also suggests the use of repeaters at intersections to eliminate the problems caused by the shadowing of large buildings in an urban environment.

- Other protocols improve on blind flooding, but most of these methods are not effective for all node densities and packet loads. A number of solutions that have been proposed take a proactive approach to address these problems, but these solutions are not acceptable in highly mobile environments.

- Since the protocol obeys the rules defined by the 802.11 standard, it can coexist with nodes that do not use this broadcast protocol. The simulations showed a high success rate when the network has a high packet load and a dense vehicle distribution.

Smart Broadcast Algorithm for Inter-Vehicle Communications, by E. Fasolo, R. Furiato, and A. Zanella

- The problem addressed is developing a broadcast protocol that provides high reliability and low propagation delay.

- The paper proposes a distributed, position-aware “Smart Broadcast” algorithm. Each node that receives a broadcast forwards the packet after a random backoff that is determined by the node's position relative to the source. The algorithm makes use of GPS to speed up the propagation of a message.

- Little attention has been paid to designing efficient and reliable broadcast propagation algorithms.

- The simulation showed the algorithm performed well, approaching the performance bound of solutions based on a minimum connected dominating set (MCDS). A problem with the algorithm is the difficulty of setting some of its parameters, such as the contention window size.
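A position-dependent backoff of this kind can be sketched as follows. This is an illustration under stated assumptions rather than the algorithm from the paper: the sender's range is split into equal sectors, and nodes in farther sectors draw their random backoff from an earlier window so that they tend to relay first. The function name, the number of sectors, and the window size `cw` are hypothetical.

```python
import random


def smart_backoff_slots(distance, tx_range, n_sectors=4, cw=4, rng=random):
    """Pick a backoff (in slots) that tends to shrink as the receiver is farther from the sender.

    The sector layout and window sizes are assumed parameters for illustration only.
    """
    if not 0 <= distance <= tx_range:
        raise ValueError("receiver must be inside the sender's range")
    sector_width = tx_range / n_sectors
    # Sector 0 is nearest to the sender; sector n_sectors - 1 is the farthest.
    sector = min(int(distance / sector_width), n_sectors - 1)
    # The farthest sector gets the earliest window [0, cw); nearer sectors wait longer.
    window_start = (n_sectors - 1 - sector) * cw
    return window_start + rng.randrange(cw)
```

The choice of `cw` shows the tuning difficulty noted above: a small window speeds up propagation but makes it more likely that two nodes in the same sector pick the same slot.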

Double-Covered Broadcast (DCB): A Simple Reliable Broadcast Algorithm in MANETs, by W. Lou and J. Wu

- The paper looks at ways to reduce broadcast redundancy in an environment that has a high transmission error rate. Forwarding nodes form a connected dominating set; finding the minimum connected dominating set has been shown to be an NP-complete problem. The paper also addresses the issue of acknowledging a broadcast. If all nodes were to send acknowledgments on the successful receipt of a broadcast packet, the “ACK explosion problem” would occur.

- The double-covered broadcast (DCB) algorithm uses broadcast redundancy to improve the delivery ratio of broadcast messages in an environment with a high error ratio. Only specified nodes in the sender’s 1-hop range forward the message. Forwarding nodes are selected to meet the following requirements: the sender's 2-hop neighbor set is fully covered, and the sender's 1-hop neighbors are either forward nodes or non-forward nodes covered by at least two forwarding neighbors, namely the sender itself and one of the selected forward nodes. The retransmission by a forwarding node serves as an implicit acknowledgment of the message. If the sender does not receive the implicit acknowledgment, it retransmits the broadcast.

- This process differs from other solutions in that the set of forwarding nodes is selected from among the 1-hop neighboring nodes.

- The DCB algorithm is sensitive to node mobility. When the nodes in the network were highly mobile, the performance of the algorithm dropped significantly, because the broadcasting node needs to maintain a set of neighbors. For this reason the algorithm may not be suitable for vehicular ad hoc networks.
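The two coverage requirements can be checked mechanically. The sketch below only verifies a candidate forward set against the requirements as summarized above; it does not reproduce how the paper selects the set. The function name and the adjacency-dictionary representation are assumptions for this example.

```python
def satisfies_double_coverage(adj, sender, forward_nodes):
    """Check a candidate forward set against the two DCB coverage requirements.

    adj maps each node to an iterable of its neighbors (hypothetical representation).
    """
    one_hop = set(adj[sender])
    forward = set(forward_nodes)
    if not forward <= one_hop:
        return False  # forward nodes must be 1-hop neighbors of the sender

    # 2-hop neighbors: reachable through a 1-hop neighbor, excluding the sender and 1-hop set.
    two_hop = set()
    for n in one_hop:
        two_hop |= set(adj[n])
    two_hop -= one_hop | {sender}

    # Every node that hears the retransmission of at least one forward node.
    hears_forward = set()
    for f in forward:
        hears_forward |= set(adj[f])

    # Non-forward 1-hop neighbors already hear the sender; they also need one forward node.
    non_forward = one_hop - forward
    return two_hop <= hears_forward and non_forward <= hears_forward
```

The check needs an up-to-date neighbor table for the sender and its neighbors, which is the information that becomes stale quickly under high mobility.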

Selecting Forwarding Neighbors in Wireless Ad Hoc Networks, by G. Calinescu, I. Mandoiu, P-J. Wan, and A. Zelikovsky

- The problem addressed in the paper is finding a forwarding set of minimum size. Minimum Forwarding Set Problem: Given a source A, let D and P be the sets of 1-hop and 2-hop neighbors of A, respectively. Find a minimum-size subset F of D such that every node in P is within the coverage area of at least one node from F.

- Redundancy can be reduced by finding the minimum number of 1-hop neighbors that cover all 2-hop neighbors.

- The authors propose two algorithms for finding the minimum forwarding set. The first is a geometric O(n log n) factor-6 approximation algorithm. The second is a combinatorial O(n^2) factor-3 approximation algorithm. These algorithms improve on the previously best known algorithm, by Bronnimann and Goodrich, which guarantees an O(1) approximation in O(n^3 log n) time.

- The authors present a new algorithm to find the smallest subset of neighbors that cover all 2-hop neighbors.
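To make the Minimum Forwarding Set Problem concrete, the sketch below applies the classic greedy set-cover heuristic to it. This is a swapped-in, generic technique and not either of the approximation algorithms proposed in the paper; the function name and the `coverage` dictionary are assumptions for this example.

```python
def greedy_forwarding_set(coverage):
    """Greedy set-cover heuristic for choosing forwarding neighbors.

    coverage maps each 1-hop neighbor to the set of 2-hop neighbors it covers.
    """
    uncovered = set()
    for covered in coverage.values():
        uncovered |= set(covered)

    forwarding = []
    while uncovered:
        # Pick the 1-hop neighbor that covers the most still-uncovered 2-hop neighbors.
        best = max(coverage, key=lambda n: len(set(coverage[n]) & uncovered))
        forwarding.append(best)
        uncovered -= set(coverage[best])
    return forwarding


# Example: "b" and "c" together cover x, y and z, so "d" is never needed.
example = {"b": {"x", "y"}, "c": {"y", "z"}, "d": {"z"}}
chosen = greedy_forwarding_set(example)
```

The greedy heuristic ignores the geometry of the coverage areas, which is what the paper's algorithms exploit to obtain constant-factor guarantees.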

ADHOC MAC: New MAC Architecture for Ad Hoc Networks Providing Efficient and Reliable Point-to-Point and Broadcast Services, by F. Borgonovo, A. Capone, M. Cesana and L. Fratta

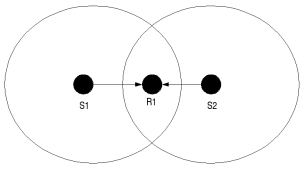

- The paper addresses the problem of reliable broadcast in wireless ad hoc networks. A reliable broadcast method is essential for exchanging location information in VANETs and for an address resolution protocol (ARP). The hidden terminal problem and the exposed node problem make it difficult to provide reliable broadcasts in wireless networks. This paper introduces a new protocol, Reliable R-ALOHA (RR-ALOHA). The problem caused by the traditional flooding approach is that if a message is broadcast to n nodes, then there will be n rebroadcasts of the message.

- The solution to the problem is a new MAC protocol called RR-ALOHA, which implements a dynamic time division multiple access (TDMA) mechanism that allows nodes to reserve resources. The protocol uses a Basic Channel (BCH) that provides nodes with knowledge of transmissions in overlapping segments. The information on the Basic Channel overcomes the problem of hidden terminals and exposed nodes by giving each node information about all nodes within its 2-hop range.

- The RR-ALOHA protocol is an extension of the R-ALOHA protocol.

- The results of the simulations run by the authors showed that the average channel setup delay was a few hundred ms.

MAC for Ad Hoc Inter-Vehicle Network: Services and Performance, by F. Borgonovo, L. Campelli, M. Cesana, and L. Coletti

- The paper analyzes the performance of ADHOC MAC, a protocol that was developed for the CarTALK 2000 project. ADHOC MAC provides a distributed reservation protocol to dynamically establish a reliable single-hop broadcast channel (BCH).

- The paper does not provide any new solutions. The purpose of the paper is to analyze the performance of the broadcast channel.

- The solution does not differ from previous methods; the goal of the paper is to analyze the ADHOC MAC protocol.

- The main contribution of the paper is the analysis of the performance of 1-hop and multi-hop broadcast messages. The results showed that ADHOC MAC provided high performance in terms of access delay and radio resource reuse.

A Decentralized Location Based Channel Access Protocol for Inter-Vehicle Communication, by S. Katragadda, G. Murthy, R. Rao M. Kumar and S. R

- The paper addresses the problem of channel reuse in a vehicular ad hoc network. The efficient allocation of channels in an ad hoc network allows the network to become more scalable.

- The authors propose the Location Channel Access (LCA) protocol to assign a channel to a node in the network based on the node's geographic position. Nodes are assigned a channel by a distributed algorithm based on information collected from a geolocation system such as GPS.

- A channel assignment method similar to the one used by cellular systems is applied to mobile ad hoc networks.

- The paper proposes a protocol that dynamically assigns channels to a vehicle in an ad hoc network, based on the vehicle's location in the network and without the use of a central controller.
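The idea of deriving a channel from position alone, in the manner of cellular frequency reuse, can be sketched as below. The square grid, the cell size, and the reuse factor are assumptions chosen for illustration and are not taken from the paper; the function name is hypothetical.

```python
def channel_for_position(x, y, cell_size=100.0, reuse=3):
    """Map a position in metres to a channel index using a square-grid reuse pattern.

    The grid layout, cell_size and reuse factor are illustrative assumptions.
    """
    col = int(x // cell_size)
    row = int(y // cell_size)
    # The same channel repeats every `reuse` cells in each direction,
    # so reuse * reuse channels are needed in total.
    return (col % reuse) + reuse * (row % reuse)
```

Because every vehicle can evaluate the mapping from its own GPS fix, no central controller or message exchange is needed to agree on the assignment.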

Probabilistic Broadcast for Flooding in Wireless Mobile Ad Hoc Networks, by Y. Sasson, D. Cavin, and A. Schiper

- The problem addressed is improving the efficiency of a flooding algorithm by using a probabilistic broadcast method.

- The authors use a probabilistic algorithm for relaying a broadcast message, where a node has probability p of rebroadcasting a message and 1 – p probability of taking no action in the rebroadcast of the message. The paper explores the possibility of applying phase transitions for selecting the probability of rebroadcasting a message. Phase transition is a well known phenomenon from percolation theory and random graphs.

- Many other studies try to optimize flooding in ad hoc networks by using a deterministic approach. This paper explores whether a probabilistic approach may be more suitable for ad hoc networks, since they are highly dynamic.

- The study showed there is a difference between the ideal behavior and the results of the actual simulations. The authors found that probabilistic flooding does not exhibit the bimodal behavior that percolation and graph theory would suggest. Probabilistic flooding did increase the success rate of transmissions when the network is densely populated. One possible direction for future work is an algorithm that dynamically adjusts the probability p. Another area of future research is understanding the effect of modifying the transmission range with regard to p.
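Probabilistic flooding is easy to simulate on an idealized graph. The sketch below assumes a collision-free network described by an adjacency dictionary, which is a simplification relative to the paper's simulations; the function and parameter names are hypothetical.

```python
import random
from collections import deque


def probabilistic_flood(adjacency, source, p, rng=random):
    """Return the set of nodes reached when each receiver relays with probability p.

    Assumes an idealized, collision-free network (an illustrative simplification).
    """
    received = {source}
    queue = deque([source])  # nodes that will transmit the message
    while queue:
        sender = queue.popleft()
        for neighbor in adjacency[sender]:
            if neighbor not in received:
                received.add(neighbor)
                # A new receiver rebroadcasts with probability p, otherwise stays silent.
                if rng.random() < p:
                    queue.append(neighbor)
    return received
```

Running the function many times for a range of p values and plotting the average fraction of nodes reached is one way to look for the sharp transition that percolation theory predicts on ideal graphs.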

A Differentiated Distributed Coordination Function MAC Protocol for Cluster-based Wireless Ad Hoc Networks, by L. Bononi, D. Blasi, and S. Rotolo

- The problem addressed in the paper is providing QoS for cluster-based networks. Clustering protocols produce a hierarchical network. The hierarchy simplifies routing, since packets only have to be routed to a certain cluster. The problem the authors consider is assigning a service class to a node based on the role that the node performs in the cluster.

- The authors propose the Differentiated Distributed Coordination Function (DDCF) to support a distributed MAC and clustering scheme. DDCF is similar to IEEE 802.11e. The difference between the two is that IEEE 802.11e differentiates service on a per-flow basis, while DDCF differentiates service based on the role of a node.

- Most approaches to QoS assign a priority on a per-flow basis. The authors suggest assigning a priority based on the role that a node plays in the network. For example, a node that is elected as the head of a cluster would have a higher priority than the other nodes in the cluster. Nodes that are responsible for routing messages between clusters are also given a higher priority.

- The authors show through simulations that DDCF is an effective distributed differentiation scheme. One assumption made is that the cluster head will be chosen based on factors such as mobility. In a vehicular environment it is likely that all vehicles will be highly mobile, so this solution may not apply.

An Adaptive Strategy for Maximizing Throughput in MAC Layer Wireless Multicast, by P. Chaporkar, A. Bhat, and S. Sarkar

- The problem addressed is providing multicast support at the MAC layer for wireless ad hoc networks. A problem with multicasting is that as the throughput increases the stability of the network decreases, so there is a trade-off between stability and throughput.

- The authors designed a policy that determines when a sender should transmit. The goal of the policy is to maximize throughput while maintaining the stability of the network. A MAC protocol was designed that acquires the local information needed to execute the policy. One problem addressed is that a transmission in a wireless network is essentially a broadcast, but not all nodes in the sender's region may be able to receive a message, because they may currently be engaged in communication with other nodes in the network. The policy determines whether the transmission should occur immediately or be postponed until more nodes are able to receive the message. The transmission policy is based on the queue length of the sender and the number of available receivers.

- Most of the previous research in wireless multicasting was concerned with the transport and network layers.

- The simulations show that the authors' approach outperforms the existing methods used in wireless multicasting. Some open problems are (a) coordinating the transmissions from different nodes to maximize performance, (b) the interaction between the proposed protocol and the transport layer, and (c) optimizing the performance in the presence of mobility.
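A transmit-or-wait decision driven by queue length and the number of ready receivers can be sketched as a simple threshold rule. The linear threshold below is an assumption made purely for illustration and is not the policy derived in the paper; the function name and the `max_queue` parameter are hypothetical.

```python
def should_transmit(queue_length, ready_receivers, group_size, max_queue=10):
    """Decide whether to transmit now or wait for more receivers to become ready.

    Illustrative rule (an assumption, not the paper's policy): the required number
    of ready receivers falls from group_size to 1 as the sender's queue fills up.
    """
    if queue_length == 0 or ready_receivers == 0:
        return False  # nothing to send, or nobody can receive
    backlog = min(queue_length, max_queue)
    # Integer arithmetic keeps the threshold exact.
    threshold = max(1, group_size - (group_size * backlog) // max_queue)
    return ready_receivers >= threshold
```

With a short queue the sender can afford to wait until most of the group is ready, which favors throughput; with a long queue it transmits to whoever is available, which favors stability.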

Broadcast Reception Rates and Effects of Priority Access in 802.11-Based Vehicular Ad Hoc Networks, by M. Torrent-Moreno, D. Jiang, and H. Hartenstein

- The main problem addressed in the paper is how well broadcast performance scales. The authors study three areas: the probability that a broadcast message is received as a function of the intended receiver's distance from the sender, how the reception rate can be improved for emergency warnings, and the effect of using different propagation models in the simulation.

- The authors propose using a priority access mechanism based on 802.11e to give access to nodes with a high priority, such as a vehicle transmitting an emergency warning. In this scenario the probability of reception is measured using the two-ray ground model and the Nakagami model.

- The authors' work differed from some of the previous work in the area in that a non-deterministic propagation model was used for the simulations.

- Using the two-ray ground model, large gains were achieved in the reception rates of emergency messages. Using the Nakagami model, the authors found the reception rates were much worse. Multi-hop relaying and retransmission strategies may be used to increase the reception rate of emergency messages. More realistic radio models are needed for the simulation of wireless ad hoc networks. Topology control mechanisms, such as varying the power level of a node, need to be created for non-deterministic models. More insight is needed into why the results were much worse with the non-deterministic model, such as determining whether radio power fluctuation or node mobility was the main contributor to the poor results.
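The gap between a deterministic and a probabilistic propagation model can be illustrated numerically. The sketch below uses the standard far-field two-ray ground formula for the mean received power and draws Nakagami-m fading by sampling the received power from a gamma distribution with shape m. The antenna heights, gains, fading parameter m, and reception threshold are assumed values, not the settings used in the paper.

```python
import random


def two_ray_ground_power(pt, d, ht=1.5, hr=1.5, gt=1.0, gr=1.0):
    """Deterministic received power (W) under the far-field two-ray ground model."""
    return pt * gt * gr * (ht ** 2) * (hr ** 2) / d ** 4


def nakagami_power(mean_power, m=1.0, rng=random):
    """One received-power sample under Nakagami-m fading (power is gamma distributed)."""
    return rng.gammavariate(m, mean_power / m)


def reception_probability(pt, d, rx_threshold, m=1.0, trials=10000, seed=0):
    """Monte Carlo estimate of P(received power >= rx_threshold) at distance d."""
    rng = random.Random(seed)
    mean_power = two_ray_ground_power(pt, d)
    hits = sum(nakagami_power(mean_power, m, rng) >= rx_threshold for _ in range(trials))
    return hits / trials
```

Under the deterministic model a packet is received whenever the distance is below a fixed cutoff and never beyond it. With fading, the reception probability falls off gradually with distance, so some nearby receivers miss packets that the deterministic model would count as delivered.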

Vehicle-to-Vehicle Safety Messaging in DSRC, by Q. Xu, T. Mak, and R. Sengupta

- The design of a MAC protocol to send vehicle-to-vehicle safety messages is investigated. The paper addresses the need to give safety messages a higher priority than non-safety messages.

- A MAC protocol is developed that is based on 802.11a and allows messages to be prioritized. The protocol was then simulated using the Friis and two-ray models.

- The paper addressed the need of creating a protocol to send safety messages in DSRC with varying priorities.

- The authors found that the protocol should be feasible if network designers and safety application designers work together. In 200 ms a vehicle should be able to collect information from 140 vehicles in its surroundings. The average time it takes for a driver to react to an accident is 0.7 seconds. Intersection communication is an area of future work. Additional adaptive control at the MAC and physical layers is needed. Further characterization of the classes of messages is also needed.

The Challenges of Robust Inter-Vehicle Communications, by M. Torrent-Moreno, M. Killat, and H. Hartenstein

- The paper addresses the adverse channel conditions in vehicular ad hoc networks. Some of the problems associated with VANETs are received signal strength fluctuation, high channel load, and high mobility. The hidden terminal problem is the main cause of poor performance in vehicular ad hoc networks.

- The solution that is proposed is to use more realistic models to design vehicular ad hoc networks. A probabilistic model should be used when designing communication protocols for vehicular ad hoc networks.

- The paper addresses the challenges of using different types of messages, such as broadcast messages, event driven messages, and the problem associated with bidirectional links.

- More realistic models need to be developed to properly model communication protocols.

A Vehicle-to-Vehicle Communication Protocol for Cooperative Collision Warning, by X. Yang, J. Liu, F. Zhao, and N. Vaidya

- A communication protocol for cooperative collision warning messages is proposed in the paper. A major challenge in the construction of an emergency warning protocol is ensuring the timely delivery of messages.

- The authors of the paper propose the Vehicular Collision Warning Communication (VCWC) protocol. The emergency warning protocol uses congestion control policies, service differentiation mechanisms and methods for emergency message dissemination. Congestion control is achieved by using a rate adjustment algorithm. The goal of the protocol is to develop a communication protocol that does not require too much overhead.

- The aim of the proposed solution is a protocol that can disseminate emergency warnings while reducing the amount of congestion in the network.

- The authors concluded that their proposed solution allows for low latency of emergency warning messages. The protocol also reduces the number of redundant emergency warning messages.

Fair Sharing of Bandwidth in VANETs, by M. Torrent-Moreno, P. Santi, and H. Hartenstein

- A problem with wireless networks is that there is a limited amount of bandwidth. There are two types of safety messages in VANETs. First, periodic messages alert other vehicles in the area of the vehicle's state. Second, emergency warnings are triggered by an unsafe driving condition. When the number of nodes sending periodic broadcasts is too large, because of high vehicle density, emergency warning messages will take longer to be received.

- The authors of the paper propose the Fair Power Adjustment Algorithm (FPAV), which is a power control algorithm that finds the optimum transmission range of a node. The problem is presented as a max-min optimization problem. When an emergency condition arises, the periodic messages should be limited. First, the FPAV algorithm maximizes the minimum transmission range of all nodes using a synchronized approach. Second, the algorithm maximizes the transmission range of each node individually while keeping the network under a certain load.

- The paper addressed the issue of broadcasting safety messages in a densely populated network.

- The goal for future work is to implement the algorithm in a fully distributed, asynchronous, and localized manner.
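The first, synchronized stage of the max-min approach can be sketched on a one-dimensional road. The model below is a simplification made for illustration: all nodes raise a common transmission range in lockstep, and the load at a node is taken to be the number of other nodes within range of it. The function name, the step size, and this definition of load are assumptions and are not taken from the paper.

```python
def max_common_range(positions, max_load, step=10.0, max_range=1000.0):
    """Largest common range (a multiple of step) that keeps every node's load within max_load.

    positions: node coordinates along a road in metres (must be non-empty).
    Load at a node is modelled as the number of other nodes within range (an assumption).
    """
    def worst_load(r):
        return max(
            sum(1 for j, q in enumerate(positions) if j != i and abs(q - p) <= r)
            for i, p in enumerate(positions)
        )

    r = 0.0
    # Raise the range for all nodes together until the next step would overload some node.
    while r + step <= max_range and worst_load(r + step) <= max_load:
        r += step
    return r
```

The result is limited by the densest part of the road. The second stage described above would then let nodes in sparser regions increase their range individually, as long as the load limit still holds everywhere.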
